
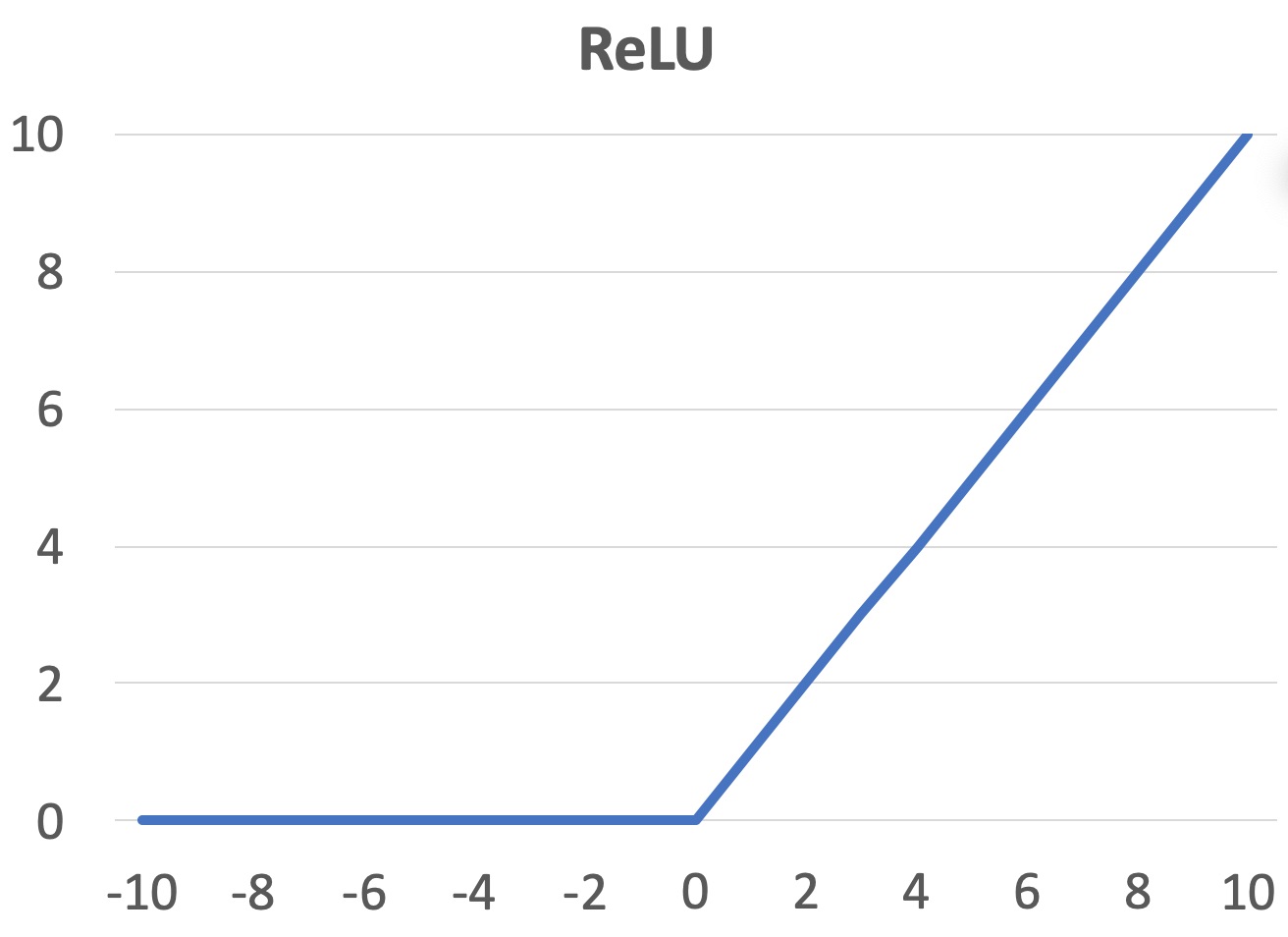

Rectified Linear Unit (ReLU)

By Vladimir Haltakov

A simple function of x that returns 0 if x < 0 and x otherwise; equivalently, ReLU(x) = max(0, x).
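Here is a minimal sketch of the definition in plain Python. It assumes scalar float inputs, and the names `relu` and `relu_all` are hypothetical, chosen only for illustration. The whole function is a single comparison, `max(0, x)`, applied independently to each unit.

```python
from typing import Iterable, List


def relu(x: float) -> float:
    # Returns 0 for x < 0 and x otherwise; equivalent to max(0, x).
    return max(0.0, x)


def relu_all(values: Iterable[float]) -> List[float]:
    # Applies ReLU element-wise, the way it is applied to each unit in a layer.
    return [relu(v) for v in values]


print(relu_all([-2.0, -0.5, 0.0, 1.5, 3.0]))  # [0.0, 0.0, 0.0, 1.5, 3.0]
```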

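ReLU is a popular activation function in deep neural networks because it is cheap to compute and helps mitigate the vanishing gradient problem. Its gradient is 1 for every positive input, so it does not shrink the gradients passed back through active units.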
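The following is a rough illustration of the vanishing-gradient point, not a model of a real network. Backpropagation multiplies local derivatives layer by layer, so this sketch compares ReLU's derivative (1 for positive inputs) with that of the logistic sigmoid (at most 0.25), a classic example of a saturating activation. It makes three simplifying assumptions: weights are ignored, every layer sees the same input, and the ReLU derivative at exactly 0 is taken to be 0. The helper names are hypothetical.

```python
import math
from typing import Callable


def relu_grad(x: float) -> float:
    # Derivative of max(0, x): 1 for x > 0, 0 for x < 0.
    # It is undefined at x == 0; this sketch assumes 0 there, a common convention.
    return 1.0 if x > 0 else 0.0


def sigmoid_grad(x: float) -> float:
    # Derivative of the logistic sigmoid: s(x) * (1 - s(x)), at most 0.25 (at x = 0).
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def chained_grad(grad_fn: Callable[[float], float], x: float, depth: int) -> float:
    # Simplified model: multiply the same local derivative `depth` times,
    # ignoring weights, to mimic backpropagation through a deep stack.
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(x)
    return g


if __name__ == "__main__":
    depth = 20
    print("sigmoid:", chained_grad(sigmoid_grad, 0.0, depth))  # 0.25**20, about 9.1e-13
    print("relu:   ", chained_grad(relu_grad, 1.0, depth))     # stays 1.0
```

Even in the sigmoid's best case, the product collapses toward zero after 20 layers, while an active ReLU unit passes the gradient through unchanged. Inactive ReLU units (negative inputs) pass no gradient at all, which is the trade-off of this design.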
